Streamlit is a popular open-source Python library that allows you to build web applications. It’s user-friendly and has a clean interface, making it a great choice for developers. One of the many great things about Streamlit is that it can easily integrate with various databases, like Snowflake.

Snowflake is a cloud-based data warehouse that is designed to be highly scalable, efficient, and secure. When integrating Streamlit with Snowflake, there are some best practices to follow to optimize performance and ensure efficient usage.

Best Way to Connect Snowflake:

For connecting Streamlit with Snowflake, Snowflake provides a powerful method to do this from your Python application, the Snowflake-Connector-Python(package). The Snowflake Python Connector allows the application to submit SQL to execute inside Snowflake.

To connect to Snowflake, you’ll need to install the required libraries and import them. Here’s an example:

import snowflake.connector #pip install snowflake-connector-python

Connecting Snowflake with a secret file in Streamlit involves securely storing your Snowflake credentials, such as your account name, username, password, and other connection parameters, in a separate file that is not accessible to others. Here’s how to connect Snowflake with a secret file in Streamlit:

Create a secrets file, such as secrets.toml file inside the .streamlit folder, that contains your Snowflake connection information. Here’s an example of what the file might look like:

user = “user name”

password = “password”

account = “account url”

warehouse = “warehouse name”

database = “database name”

schema = “schema name”

On the other hand, .gitignore is a folder that contain files that should be ignored by Git when committing your code to git. This is useful when there are files in the project that do not need to be committed to Git, such as temporary files.

Snowflake is a cloud-based data warehouse that is designed to be highly scalable, efficient, and secure. When integrating Streamlit with Snowflake, there are some best practices to follow to optimize performance and ensure efficient usage.

1. Use a dedicated Snowflake service account:

To interact with Snowflake, use Snowflake Python Connector. It’s a lightweight package that enables the efficient loading of data from Snowflake into your Streamlit application. This can help reduce latency, leading to faster query response times.

Optimizing queries is a critical step to improve the performance of your Streamlit application. Ensure that you only query the data you need and avoid processing unnecessary data. It’s also a good idea to use indexes on columns that are frequently used in your queries, as this can help speed up query response times.

Streamlit has caching features like cache_data and cache_resource that allows you to save the results of computationally expensive queries. This is useful when you have a query that takes a long time to execute and may need to be run repeatedly. By caching the results, you can save time and resources.

The cache_data is used within the st.cache function to specify how long the cached data should be stored in memory.

The cache_resource argument is used within the st.cache function to specify how the cached resource should be stored and retrieved. For database connections and sql queries use cache_resource.

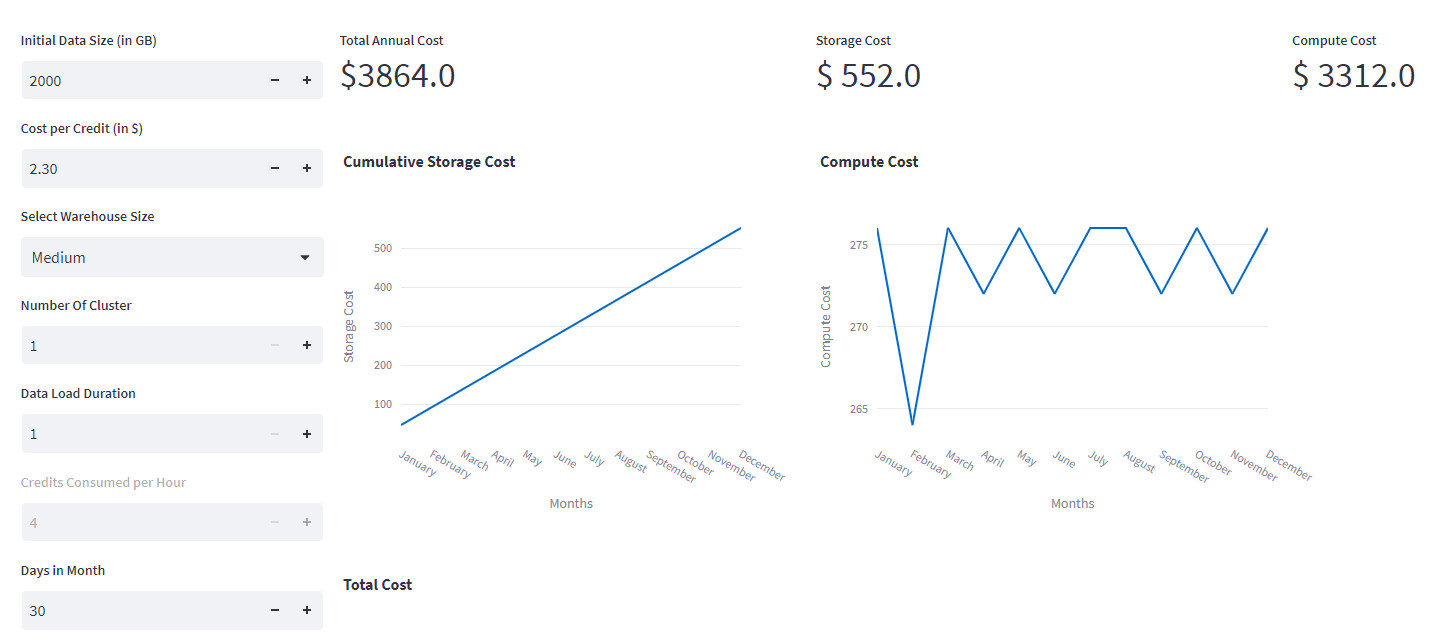

Monitoring resource usage is crucial for ensuring that your Streamlit application runs smoothly. Use the Snowflake web interface to monitor query and compute usage and ensure that you’re not exceeding your account limits. Additionally, use Streamlit’s profiling features to identify any performance bottlenecks in your application.

By default, Streamlit application reruns every time when we enter a value and it’s a big problem when we are dealing with huge amount of data, to avoid this we have a concept of Streamlit forms.

For login pages and other input widgets use Streamlit forms. It comes with a button, when button is pressed, all widgets’ values inside the form will be sent to Streamlit in a batch. By this, we can avoid the rerunning issue.

import streamlit as st

with st.form(key = ‘demo’ ):

value1 = st.number_input(‘Enter first value’)

value2 = st.number_input(‘Enter second value’)

submit_button = st.form_submit_button(label =’Submit’)

if submit_button:

value1 + value2

2. Streamlit Cache:

Streamlit cache is a simple caching mechanism that allows to store the results, so that results are only executed once and then stored in memory for subsequent use. This can significantly improve the performance of your applications, particularly when working with large datasets.

Streamlit has caching features like cache_data and cache_resource that allows you to save the results of computationally expensive queries. This is useful when you have a query that takes a long time to execute and may need to be run repeatedly. By caching the results, you can save time and resources.

For storing results use cache_data.

For database connections and SQL queries use cache_resource.

import streamlit as st

import pandas as pd

@st.cache_data

def load(data):

df = pd.read_csv(data)

df = load(’emp.csv’)

st.dataframe(df)

connecion = database.connect()

@st.cache_data

def query():

return pd.read_sql_query(“select * from table”,connection)

Session states in Streamlit are variables that are remembered across user interactions. When a user interacts with a Streamlit application, they can change the state of the application by selecting different inputs or interacting with different components. Session states allow you to store and update this state in real-time, and to use this state to update the visualizations and components of the application.

import streamlit as st

import pandas as pd

import snowflake.connector

username = ‘enter user name‘

password = ‘enter password‘

account = ‘enter account url‘

if “username” not in st.session_state:

st.session_state[“username“]=username

if “password” not in st.session_state:

st.session_state[“password“]=password

if “account” not in st.session_state:

st.session_state[“account“]=account

if st.button(“connect“):

try:

con = snowflake.connector.connect(

user=username,

password=password,

account=account

)

st.success(“Successfully connected to snowflake“)

except:

st.error(“fail“)

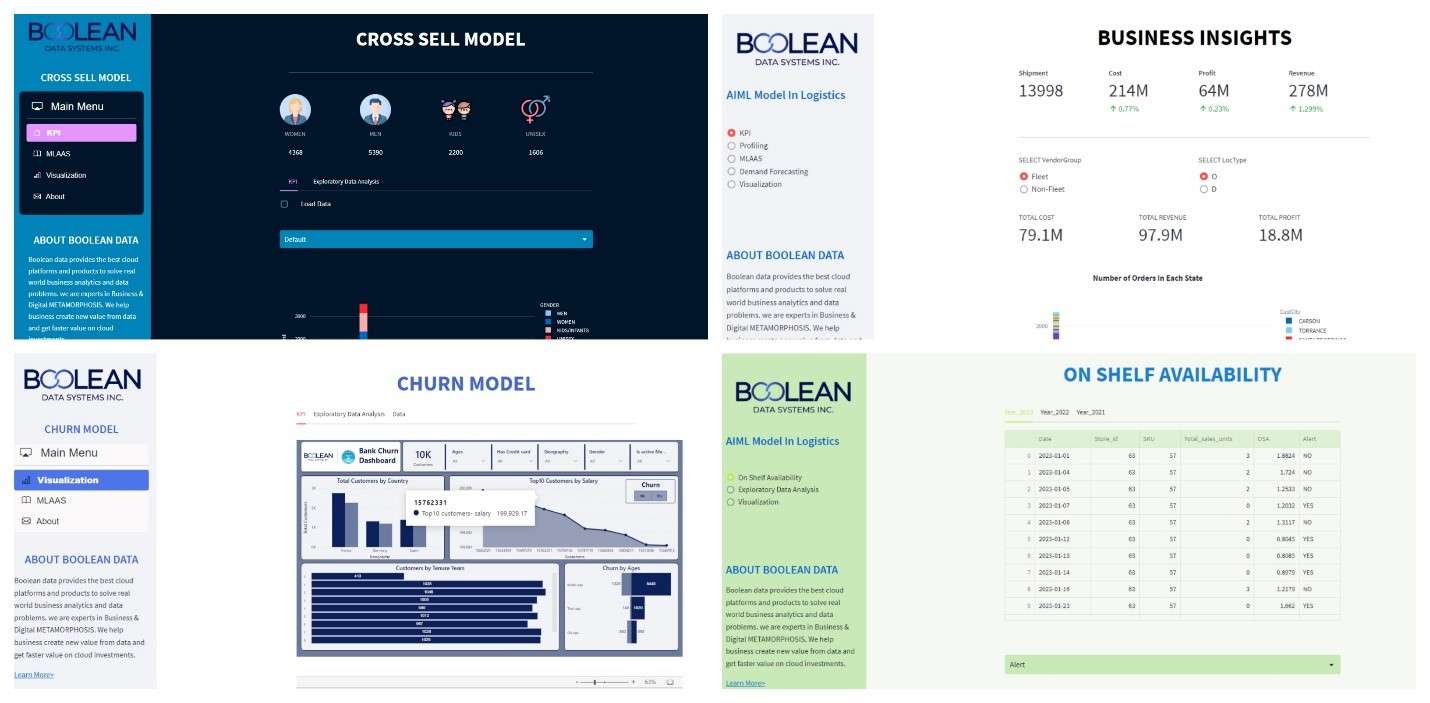

2. Streamlit for Data Science:

Conclusion:

Streamlit developers can create powerful and user-friendly applications that leverage the capabilities of Snowflake’s cloud data platform. These best practices are essential for developing applications that provide smooth user experiences and effectively convey insights derived from Snowflake data.

About Boolean Data

Systems

Boolean Data Systems is a Snowflake Select Services partner that implements solutions on cloud platforms. we help enterprises make better business decisions with data and solve real-world business analytics and data problems.

Services and

Offerings

Solutions &

Accelerators

Global

Head Quarters

1255 Peachtree Parkway, Suite #4204, Alpharetta, GA 30041, USA.

Ph. : +1 678-261-8899

Fax : (470) 560-3866