Data ingestion in Snowflake refers to the process of loading data from various sources into Snowflake’s cloud-based data warehouse. Snowflake provides various ingestion patterns that allow users to load data from a wide range of sources.

In this blog, we will explore some common data ingestion patterns.

Let’s go through some of the top Snowflake cost control techniques in this blog article.

- 1. Bulk Data Ingestion

- 2. Continuous data loading (Snow pipe)

- 3. Data Ingestion using Web Interface

- 4. Loading Data from Apache Kafka

- 5. Loading using partner connect tools

Snowflake allows bulk data ingestion using the COPY command. This command loads large volumes of data from various sources.

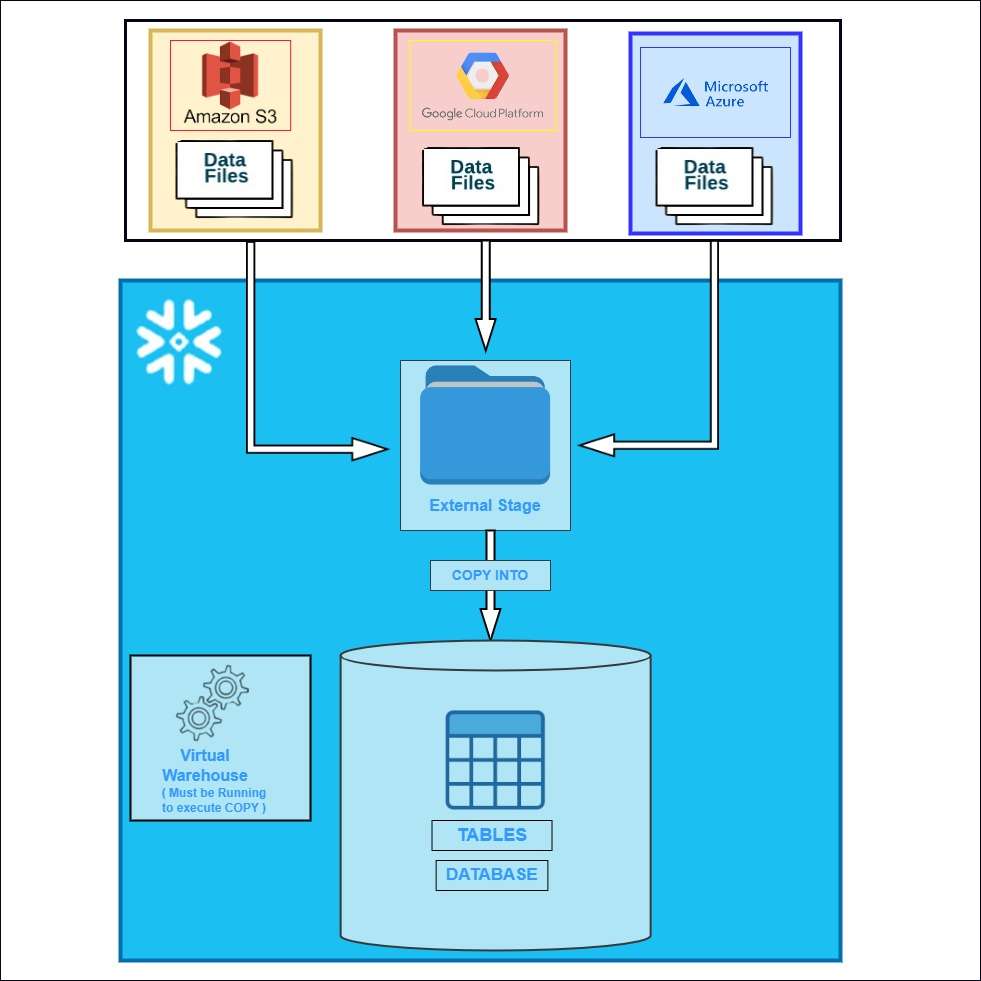

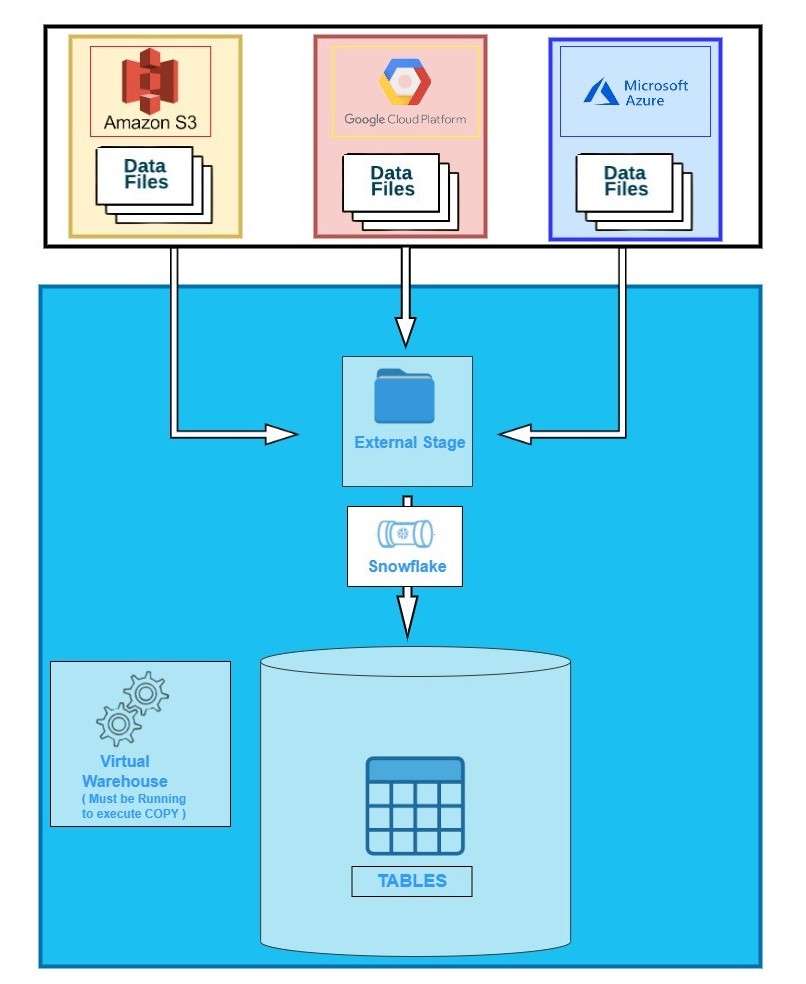

This is managed by a Business Entity used to load data from any of the following cloud storage services regardless of the cloud platform that hosts your Snowflake account.

Amazon S3

Google Cloud Platform

Microsoft Azure

A Named external stage is a database object created in a schema. This object stores the URL to files in cloud storage, the settings used to access the cloud storage account, and convenience settings such as the options that describe the format of staged files. Create stages using the CREATE STAGE command.

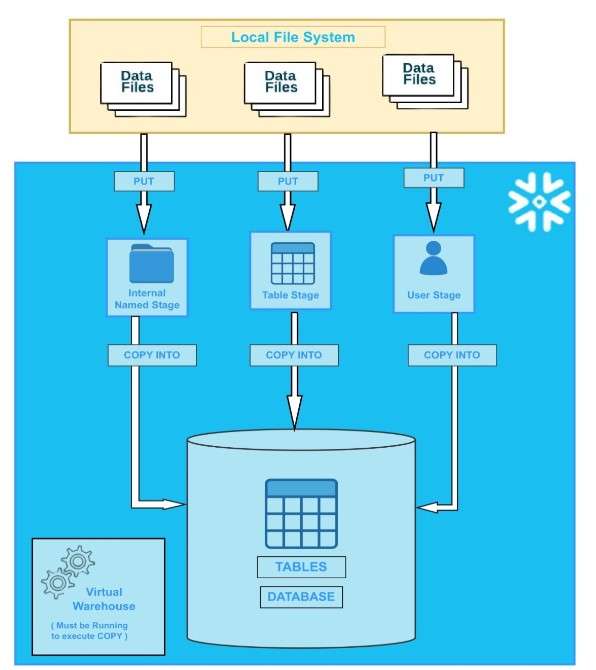

This is used to upload files to any of the internal stage types from your local file system using the PUT command. From that internal stage we can use COPY INTO TABLE command to load it into a snowflake table.

The internal stages are:

• User Stage

• Table Stage

• Internal Named Stage

User Stage: By default, each user in Snowflake has a Stage assigned to them for Storing Files. We can identify with “@~”.

Table Stage: By default, each table in Snowflake has a stage allocated to it for storing files. We can identify with “@%”.

Internal Named Stage: Internal Named stages are Database objects that can substitute for User stage and Table Stage. We can identify with “@”.

The COPY command is highly scalable, and it can load data in parallel, making it faster and more efficient.

Note: While loading data into table using COPY command, we can do simple transformations like Column reordering, column omission, casts etc.

Snowpipe is a continuous data ingestion service provided by Snowflake that enables loading of data from one or more sources which includes cloud storage platforms like Amazon S3, Azure Blob Storage, or Google Cloud Storage. This service can be used to make data loading automate and typically it is used in use cases where data needs to be updated frequently.

It is designed to load small volumes of data incrementally. Snowpipe loads data from files within minutes as soon as they are available in a stage. This means, you can load data from files in micro-batches, making it available to users within minutes rather than executing COPY statements on a schedule to load larger batches.

The recommended file size for data loading is 100-250MB (compressed).

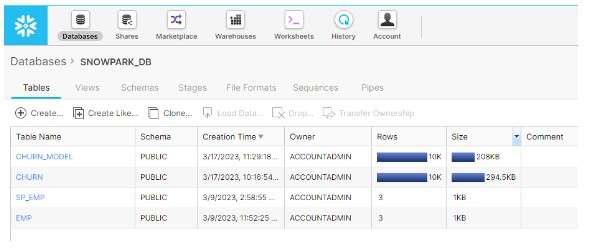

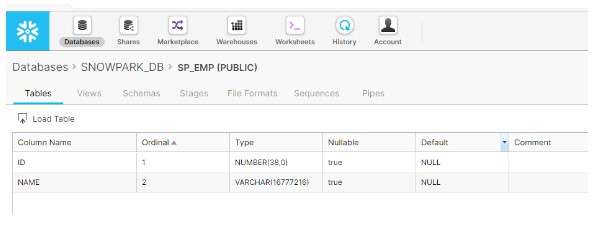

The classic web interface provides a convenient wizard for loading limited amounts of data into a table that is smaller than 50 MB in size. Behind the scenes, the wizard uses the PUT and COPY commands to load data. However, the wizard simplifies the data loading process by combining the two phases (staging files and loading data) into a single operation and deleting all staged files after the load completes.

This is suitable for small to medium sized datasets.

• First, we need to create a table using SQL command or Web Interface.

• Click on the table to Load Table.

The web interface is intuitive, user-friendly, and enables users to upload data without the need for complex scripting or programming skills.

Loading Data from Apache Kafka:

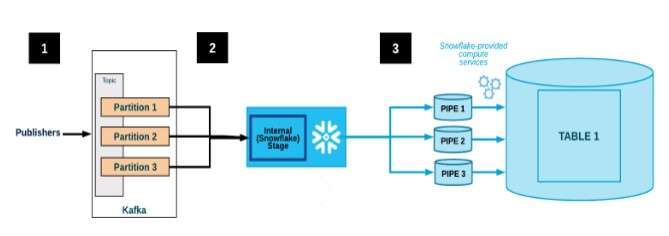

The Snowflake Connector for Kafka enables users to connect to an Apache Kafka server, read data from one or more topics, and load that data into Snowflake tables.

The process of loading data from Apache Kafka to Snowflake involves Kafka connector subscribes to one or more Kafka topics based on the configuration. The connector creates one internal stage to temporarily store data for each Kafka topic and also it creates snowpipe to ingest data files each topic partition.

It will create one table for each topic. If the table specified for each topic does not exist, the connector creates it; otherwise, the connector creates the RECORD_CONTENT and RECORD_METADATA columns in the existing table.

Once this is done, Kafka Connect will automatically read data from Kafka and write it to the Snowflake table.

Note: It supports JSON and AVRO file formats only.

When using Partner Connect tools, you can configure the data integration tool to extract data from various data sources such as databases, cloud storage, and other data repositories, transform the data to meet your specific requirements, and load the data into Snowflake. This process reduces the need for manual intervention, thus saving time and increasing the accuracy of the data.

Partner Connect provides a seamless integration experience, making it easier for users to load data into Snowflake using various integration tools, including cloud-native integration tools.

Supported File Formats: All ingestion methods support the most common file formats like:

- CSV, TSV

- JSON

- AVRO

- ORC

- PARQUET

- XML (supports as preview feature)

Narala Suvarna Anjali

Data Engineer

Boolean Data Systems

Anjali works as a Data Engineer at Boolean Data Systems and has built many end-end Data Engineering solutions. She is a SnowPro Core and Matillion Associate certified engineer who has proven skills and expertise with Snowflake, Matillion, Python to name a few.

Conclusion:

Snowflake provides a highly scalable and efficient solution for data ingestion, enabling seamless integration with a wide range of data sources. Choosing the right data ingestion pattern depends on factors such as the size of the data, the frequency of loading, and the data source.

About Boolean Data

Systems

Boolean Data Systems is a Snowflake Select Services partner that implements solutions on cloud platforms. we help enterprises make better business decisions with data and solve real-world business analytics and data problems.

Services and

Offerings

Solutions &

Accelerators

Global

Head Quarters

1255 Peachtree Parkway, Suite #4204, Alpharetta, GA 30041, USA.

Ph. : +1 678-261-8899

Fax : (470) 560-3866