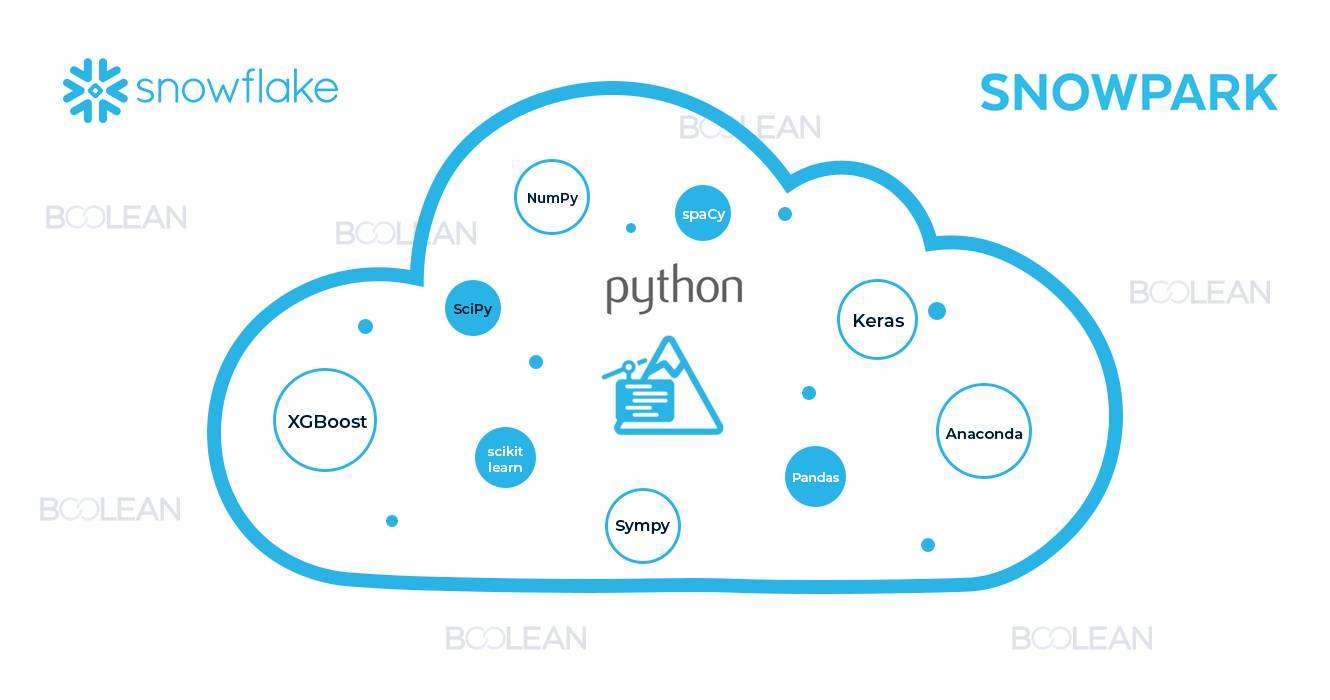

Snowpark is Snowflake's data engineering and data processing environment, and it enables you to perform big data processing and analytics. However, like any other technology, Snowpark comes with its own set of challenges.

In this blog, we will discuss some of the common challenges that users face while working with Snowpark in Snowflake Cloud Services.

1. Performance issues:

Snowpark is designed to handle large amounts of data, but performance issues can still occur when working with complex data structures or very large datasets. This can lead to slow processing times and decreased efficiency.

To address this, analyze query performance with Snowflake's built-in tools, such as Query Profile and the query history.
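As a starting point for this kind of analysis, the sketch below builds a query against Snowflake's `INFORMATION_SCHEMA.QUERY_HISTORY` table function to list the slowest recent statements. The helper names `slowest_queries_sql` and `slowest_queries` are hypothetical, and `session` is assumed to be an already created Snowpark session; treat this as an illustration rather than a complete profiling workflow.

```python
def slowest_queries_sql(limit: int = 10, scan_last: int = 1000) -> str:
    """Build a query that lists the slowest recent statements.

    scan_last is how many recent statements QUERY_HISTORY should return
    before we sort them by elapsed time (hypothetical parameter name).
    """
    if limit < 1 or scan_last < 1:
        raise ValueError("limit and scan_last must be positive")
    return (
        "SELECT query_id, query_text, total_elapsed_time "
        "FROM TABLE(INFORMATION_SCHEMA.QUERY_HISTORY("
        f"RESULT_LIMIT => {int(scan_last)})) "
        "ORDER BY total_elapsed_time DESC "
        f"LIMIT {int(limit)}"
    )


def slowest_queries(session, limit: int = 10):
    """Run the query through an existing Snowpark session and return the rows."""
    return session.sql(slowest_queries_sql(limit)).collect()
```

The `query_id` values returned here can then be opened in Query Profile to see which operators dominate the run time.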

Integrating Snowpark with other tools and platforms can sometimes be challenging. Compatibility issues between Snowpark and other tools or platforms can lead to integration problems, which can reduce efficiency.

Snowpark can be a complex environment, and users may find it challenging to understand and navigate. This can lead to longer development cycles and decreased efficiency.

Understand which Anaconda packages and libraries Snowpark supports.

Try to build models with algorithms that work efficiently with Snowpark. For example, XGBoost is a good option because it is efficient and fits well into data pipelines.

Snowpark supports only specific versions of Anaconda packages, and finding those versions can be time-consuming.

Developers should first understand Snowpark's requirements and then find the required libraries or packages in suitable versions.

Before using Snowpark, check which Anaconda libraries it supports.
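One way to check package support is to query the `INFORMATION_SCHEMA.PACKAGES` view from an existing session. The sketch below assumes `session` is a connected Snowpark session; the helper names are hypothetical and only illustrate the lookup.

```python
def package_versions_sql(package_name: str) -> str:
    """Build a lookup against INFORMATION_SCHEMA.PACKAGES for one Python package."""
    # Lowercase the name and escape single quotes before embedding it in SQL.
    safe_name = package_name.lower().replace("'", "''")
    return (
        "SELECT version FROM INFORMATION_SCHEMA.PACKAGES "
        f"WHERE language = 'python' AND package_name = '{safe_name}'"
    )


def available_versions(session, package_name: str) -> list:
    """Return the distinct versions listed for the package.

    The result is sorted as plain strings, not by version precedence.
    """
    rows = session.sql(package_versions_sql(package_name)).collect()
    return sorted({row[0] for row in rows})


def is_version_available(session, package_name: str, version: str) -> bool:
    """Check whether an exact version string is listed for the package."""
    return version in available_versions(session, package_name)
```

For example, `is_version_available(session, "scikit-learn", "1.0.2")` can be run before pinning that version in a UDF.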

Snowpark has its own data processing and storage limitations, which can lead to performance issues when processing large amounts of data or working with complex data structures.

To address this, we can use distributed computing techniques, optimize our data processing workflows, and choose the right hardware (in Snowflake, an appropriately sized virtual warehouse).

By following these steps, we can prepare our data for model fitting and improve the performance of our data processing workflows.
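In Snowflake, one reading of "choosing the right hardware" is resizing the virtual warehouse that runs the workload. The sketch below only builds the `ALTER WAREHOUSE` statement; the helper name `resize_warehouse_sql` and the warehouse name used in the usage note are hypothetical, and the size list covers only some of the sizes Snowflake offers.

```python
import re

# A subset of Snowflake warehouse sizes, written without hyphens.
WAREHOUSE_SIZES = (
    "XSMALL", "SMALL", "MEDIUM", "LARGE",
    "XLARGE", "XXLARGE", "XXXLARGE", "X4LARGE",
)

# Accept only simple unquoted identifiers to avoid building unsafe SQL.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def resize_warehouse_sql(warehouse: str, size: str) -> str:
    """Build an ALTER WAREHOUSE statement that changes the warehouse size."""
    normalized = size.upper().replace("-", "")
    if normalized not in WAREHOUSE_SIZES:
        raise ValueError(f"Unsupported warehouse size: {size}")
    if not _IDENTIFIER.match(warehouse):
        raise ValueError(f"Unexpected warehouse name: {warehouse}")
    return f"ALTER WAREHOUSE {warehouse} SET WAREHOUSE_SIZE = {normalized}"
```

With an existing session, `session.sql(resize_warehouse_sql("ML_WH", "large")).collect()` would apply the change before a heavy training or transformation step.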

Snowflake and Snowpark are cloud-based services, so security can be a concern for some organizations. Users should ensure that they have proper security measures in place, including data encryption and access controls, to protect sensitive data.

When working with Snowflake and Snowpark, users should implement proper security measures such as data encryption, access controls, authentication and authorization mechanisms, and network security measures to protect sensitive data.

When writing scripts, avoid hard-coding credentials in the script; load them from config or parameter files instead.
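A minimal sketch of this idea reads the connection parameters from environment variables instead of the script. The variable names (`SNOWFLAKE_ACCOUNT`, `SNOWFLAKE_USER`, and so on) and the helper name are assumptions chosen for illustration; a config or parameter file would work the same way.

```python
import os

REQUIRED_KEYS = ("account", "user", "password")
OPTIONAL_KEYS = ("warehouse", "database", "schema", "role")


def connection_params_from_env(env=None, prefix="SNOWFLAKE_"):
    """Collect Snowpark connection parameters from environment variables."""
    env = os.environ if env is None else env
    params = {}
    missing = []
    for key in REQUIRED_KEYS:
        name = prefix + key.upper()
        value = env.get(name)
        if value:
            params[key] = value
        else:
            missing.append(name)
    if missing:
        raise KeyError("Missing environment variables: " + ", ".join(missing))
    # Optional settings are included only when they are set.
    for key in OPTIONAL_KEYS:
        value = env.get(prefix + key.upper())
        if value:
            params[key] = value
    return params
```

The resulting dictionary can be passed to `Session.builder.configs(...)`, so no secret ever appears in the source file.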

When working on ML models, Snowpark supports Anaconda packages, but it does not offer as many libraries as a full Anaconda distribution. Finding workable substitutes is time-consuming and may lead to less accurate results.

While Snowpark may not support all of the packages that are available in Anaconda, there may be alternative packages that can accomplish similar tasks. By researching alternative packages, you may be able to find a solution that works for your use case.

Snowflake provides native support for some machine learning libraries, including TensorFlow and scikit-learn. By using these built-in libraries, you may be able to perform your desired ML tasks without needing to rely on external packages.

If Snowpark doesn’t support the packages you need, you can consider using it in conjunction with other tools. For example, you can use Snowpark to retrieve and pre-process data from Snowflake, and then use other tools, such as Jupyter Notebook, to build and train ML models.

Snowpark is designed to work with Snowflake, but it may not be able to work with legacy data sources. This can be a challenge for organizations that need to work with data from older systems, as they may need to perform data migrations to get the data into Snowflake.

Organizations can consider several strategies. One approach is to perform data migrations to move the data from legacy systems into Snowflake. Although this can be a complex and time-consuming process, it may be necessary to fully utilize Snowpark’s capabilities.

Another approach is to use connectors that enable Snowpark to work with other systems. By using connectors, organizations may be able to access data from legacy systems without performing data migrations.

Organizations can also use external ETL and orchestration tools, such as Matillion, Fivetran, dbt, Talend, or Apache Airflow, to extract and transform data from legacy systems and then load it into Snowflake for analysis with Snowpark. This approach may provide a more streamlined and efficient solution.

Organizations can consider implementing APIs that allow Snowpark to access legacy data sources directly. Although this approach may require more development work, it can be a viable option for organizations that need to work with legacy systems regularly.
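To make the migration path concrete, here is a small sketch that parses a CSV export from a legacy system and appends it to a Snowflake table through Snowpark. It assumes `session` is an existing Snowpark session and that the export has a header row; the function names are hypothetical, and all values are loaded as strings for simplicity.

```python
import csv
import io


def read_legacy_csv(text: str):
    """Parse a CSV export into upper-cased column names and a list of row tuples."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader, None)
    if header is None:
        raise ValueError("The export is empty")
    columns = [name.strip().upper() for name in header]
    # Skip blank lines and trim whitespace around each value.
    rows = [tuple(cell.strip() for cell in record) for record in reader if record]
    return columns, rows


def load_into_snowflake(session, columns, rows, table_name: str) -> int:
    """Append the parsed rows to a Snowflake table and return the row count."""
    df = session.create_dataframe(rows, schema=columns)
    df.write.mode("append").save_as_table(table_name)
    return len(rows)
```

For large exports, a bulk load through a stage or an ETL tool is usually a better fit than building the DataFrame in client memory.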

Snowpark is a relatively new feature of Snowflake, and there may not be a lot of documentation or support available for users. This can make it challenging for users to find answers to questions or resolve issues that they encounter.

There are a few strategies that users can consider. First, they can leverage the existing Snowpark documentation and resources provided by Snowflake to the fullest extent possible. Although the documentation may be limited, it may still provide useful information and guidance to help users get started with Snowpark.

Another strategy is to join Snowflake’s online community, where users can ask questions and get support from other users and experts. The community is a great resource for finding answers to specific questions or issues that may not be covered in the documentation.

Users can also reach out to Snowflake's support team directly for help. Snowflake offers a few demos for building ML models and sample predictions. To learn more about machine learning, Anaconda, and Snowpark, see the Snowflake Labs repositories on GitHub.

Snowpark libraries and packages are optimized to work with Snowflake’s architecture and data processing capabilities, which may not be easily transferable to other data platforms or cloud providers.

There are several reasons why Snowpark libraries or packages may not be easily accessible outside of Snowflake.

1. Firstly, these libraries may have dependencies on other Snowflake-specific libraries or packages. They may not be readily available outside Snowflake.

2. Secondly, Snowpark libraries or packages are specifically tailored to work with Snowflake’s platform and infrastructure and may not be compatible with other data platforms or cloud providers. Additionally, Snowflake is known for its high-performance computing infrastructure, and Snowpark libraries or packages are optimized to work with this infrastructure. This means that they may not perform well on other platforms or environments.

3. Finally, Snowflake is known for its high level of security and data protection, and Snowpark libraries or packages may rely on these security features, making it difficult to use them securely outside of the Snowflake environment.

While it is possible to access Snowpark libraries from outside of Snowflake, it would require additional configuration and customization. The Snowflake REST API could be used to execute Snowpark scripts or queries stored in Snowflake from external applications. Another option would be to extract the Snowpark code from Snowflake and run it locally on your own system. However, you would need to ensure that any dependencies on Snowflake-specific functionalities are addressed and provide your own data source and infrastructure.
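As an illustration of the REST route, the sketch below prepares a request for Snowflake's SQL API (`/api/v2/statements`), which an external application could use to run a statement that calls a UDF stored in Snowflake. It only builds the request and does not send it. The function name is hypothetical, and it assumes you already have a key-pair JWT for authentication.

```python
import json
import urllib.request


def build_statement_request(account_identifier, statement, jwt_token,
                            warehouse=None, database=None, schema=None,
                            timeout_seconds=60):
    """Prepare (but do not send) a SQL API request that submits one statement."""
    url = f"https://{account_identifier}.snowflakecomputing.com/api/v2/statements"
    body = {"statement": statement, "timeout": timeout_seconds}
    # Add optional execution context only when it is provided.
    for key, value in (("warehouse", warehouse),
                       ("database", database),
                       ("schema", schema)):
        if value:
            body[key] = value
    headers = {
        "Authorization": f"Bearer {jwt_token}",
        "Content-Type": "application/json",
        "Accept": "application/json",
        "X-Snowflake-Authorization-Token-Type": "KEYPAIR_JWT",
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(request)` returns a JSON response; error handling and result pagination are left out of this sketch.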

Here, we build a sample ML model using Python, wrap it in a UDF, and push the UDF to a Snowflake stage using Snowpark. This is only meant to illustrate Snowpark and its functionality.

In this example, we use an article classification table from Snowflake that contains information about products such as clothes and shoes, and whether each one sells fast, medium, or slow.

Snowpark supports Python 3.8 (as of March 2023).

    # Snowpark
    from snowflake.snowpark import Session
    from snowflake.snowpark.types import IntegerType, StringType, StructType, FloatType, StructField, DateType, Variant
    from snowflake.snowpark.functions import udf, sum, col, array_construct, month, year, call_udf, lit
    from snowflake.snowpark.version import VERSION

    # Misc
    import json
    import pandas as pd
    import numpy as np

    # Placeholder credentials; replace with your own values
    snowuser = 'XXXXX'
    snowpass = 'XXXX'
    snowacct = 'XXXX'

    print('connecting..')
    cnn_params = {
        "account": snowacct,
        "user": snowuser,
        "password": snowpass,
        "warehouse": "XXXX",
        "database": "XXXX",
        "schema": "XXXX"
    }
    session = Session.builder.configs(cnn_params).create()
    print("Snowflake Connection Success")

    snow_df_article = session.table('ARTICLE_TABLE')
    snow_df_article.queries  # the SQL that Snowpark will run for this DataFrame

    # Drop descriptive and status columns that are not used as model features
    snow_df_article_transformed = snow_df_article.drop(
        "ARTICLE", "ARTICLE_DESCRIPTION", "FRANCHISE", "KCC",
        "BUSINESS_SEGMENT_DESC", "PRODUCT_DIVISION", "GENDER",
        "PRODUCT_TYPE", "PRODUCT_GROUP", "SALES_LINE", "COLOR_GROUP",
        "TRAFFIC_STATUS", "CONVERSION_STATUS", "SIZE_AVAILABILITY_STATUS",
        "STOCK_AVAILABILITY_STATUS", "RETURN_RATE_STATUS",
    )
    snow_df_article_transformed = snow_df_article_transformed.dropna()
    snow_df_article_transformed.write.mode('overwrite').save_as_table('Article_features')

    snow_df_article = session.table('Article_features')
    df = snow_df_article.to_pandas()

    # Model input columns; the target ACTUAL_STATUS is kept separate
    features = ["RETURN_QTY", "TRAFFIC", "ORDERS", "CONVERSION",
                "AVAILABLE_SIZES", "TOTAL_SIZES",
                "SIZE_AVAILABILITY", "STOCK_AVAILABILITY", "RETURN_RATE",
                "AVG_DISCOUNT", "TOTAL_OPP", "AWC",
                "PARTNER_PROGRAM_DV", "ID"]

    # Encode the target labels as integers
    df.replace({'SLOW': 0, 'FAST': 1, 'MEDIUM': 2}, inplace=True)

    from sklearn.model_selection import train_test_split

    X_train, X_test, y_train, y_test = train_test_split(
        df[features],         # predictive variables
        df['ACTUAL_STATUS'],  # target
        test_size=0.2,        # portion of dataset to allocate to test set
        random_state=0,       # we are setting the seed here
    )
    print(X_train.shape)
    print(X_test.shape)

    from sklearn.preprocessing import OrdinalEncoder, MinMaxScaler, FunctionTransformer
    from sklearn.impute import SimpleImputer

    # Classifier
    from sklearn.ensemble import RandomForestClassifier

    # Pipeline
    from sklearn.pipeline import make_pipeline

    # Model accuracy
    from sklearn.metrics import balanced_accuracy_score

    # Model pipeline
    ord_pipe = make_pipeline(
        FunctionTransformer(lambda x: x.astype(str)),
        OrdinalEncoder(handle_unknown='use_encoded_value', unknown_value=-1)
    )
    num_pipe = make_pipeline(
        SimpleImputer(missing_values=np.nan, strategy='constant', fill_value=0),
        MinMaxScaler()
    )
    clf = make_pipeline(RandomForestClassifier(random_state=0, n_estimators=250))
    model = make_pipeline(ord_pipe, num_pipe, clf)

    # Fit the model
    model.fit(X_train, y_train)

    # Accuracy testing: predict class labels directly, since the target has three classes
    predictions = model.predict(X_test)
    balanced_accuracy = balanced_accuracy_score(y_test, predictions)
    print("Model testing completed.\n - Model Balanced Accuracy: %.2f%%" % (balanced_accuracy * 100.0))

    # Creation of stage
    print(session.sql('create stage if not exists MODEL_STAGE_ART').collect())

    # Creation of UDF
    session.add_packages("scikit-learn==1.0.2", "pandas", "numpy")

    @udf(name='Article_status_prediction', is_permanent=True, stage_location='@MODEL_STAGE_ART', replace=True)
    def predict_churn(args: list) -> float:
        row = pd.DataFrame([args], columns=features)
        # model.predict returns an array; return its single element as a float
        return float(model.predict(row)[0])
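Once the UDF is registered, it can be called from SQL like any other function. The sketch below builds such a query, packing the feature columns into an array because the UDF takes a single list argument. The helper name `prediction_query` and the `PREDICTED_STATUS` alias are hypothetical; the UDF and table names are the ones used above.

```python
def prediction_query(udf_name: str, feature_columns, table_name: str) -> str:
    """Build a SELECT that passes the feature columns to the UDF as one array."""
    if not feature_columns:
        raise ValueError("At least one feature column is required")
    args = ", ".join(feature_columns)
    return (
        f"SELECT {udf_name}(ARRAY_CONSTRUCT({args})) AS PREDICTED_STATUS "
        f"FROM {table_name}"
    )


# Example with two of the feature columns from the article table.
query = prediction_query(
    "Article_status_prediction", ["TRAFFIC", "ORDERS"], "Article_features"
)
```

In practice the column list should match the features the model was trained on, in the same order, and the query can be run with `session.sql(query).collect()`.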

Anil Kumar L

Data Engineer

Boolean Data Systems

Anil works as a Data Engineer at Boolean Data Systems and has built many end-to-end data engineering solutions. He is a SnowPro Core and Matillion Associate certified engineer with proven skills and expertise in Snowflake, Matillion, ML/DL, Python, and Streamlit, to name a few.

Conclusion:

Snowpark is a powerful tool for data processing in the cloud, but like any technology, it has its share of challenges. The most frequently encountered issues are performance problems, difficulties in setting up and configuring the environment, and limitations in the Snowpark API. Fortunately, these problems can be reduced with some effort and expertise.

By following the documentation and troubleshooting steps, staying up to date with Snowpark releases, and seeking help from the Snowpark community, developers can improve performance. Snowpark can indeed help organizations unlock the full potential of their data in the cloud.

About Boolean Data Systems

Boolean Data Systems is a Snowflake Select Services partner that implements solutions on cloud platforms. We help enterprises make better business decisions with data and solve real-world business analytics and data problems.
